What is Cloud Native?

By Richard Lander, CTO | April 12, 2024

In this blog post we're going to break down what "Cloud Native" means for software. I promise this won't be just a navel-gazing theoretical exercise: understanding the term has tangible benefits for how you design and implement software systems.

What is the Cloud?

What makes software native to "the cloud?" The cloud is merely a place to rent servers and other compute infrastructure. How does being able to summon on-demand compute infrastructure change how you design and build your software?

The answer lies in the opportunities the cloud presents. Cloud-native software is built to exploit these opportunities, and doing so expands the capabilities of your delivery systems. Software design has evolved accordingly.

Before the Cloud

Before we could call an API to provision virtual machines in the cloud, acquiring more compute capacity was much more time-consuming and required up-front capital to purchase servers and equipment. As a consequence, the amount of software an organization could deploy was limited. At that scale, it was feasible for system admins to manage software delivery with deployment runbooks and scripts. The software itself was usually uncomplicated and had little automation in its delivery and operations.

When more compute capacity became available with a pay-as-you-go model, organizations found themselves able to build and deploy more software. In response, both the delivery mechanisms and the software architecture itself evolved. DevOps emerged as the practice used to manage delivery, and Docker brought Linux containers to the mainstream.

What Makes Software Native to the Cloud?

A good way to think about cloud-native software is software that is designed and built to take advantage of modern cloud computing. Following are some of the areas where we've seen significant advancement, along with their limitations and where they fall short. Cloud native describes an evolving spectrum rather than an end state.

Software-Driven Infrastructure

When the cloud providers exposed APIs for programmatic infrastructure provisioning, a new world opened up for software delivery. Teams could spin environments up and down on demand, and they could scale the amount of compute resources in response to end-user demand.
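
Scaling in response to demand usually comes down to a small control calculation whose result is applied through a provisioning API. One well-documented example is the formula used by the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python sketch below reproduces only that arithmetic. The function name and the min/max clamp parameters are illustrative assumptions, it calls no real cloud or Kubernetes API, and it omits the tolerance and stabilization behavior the real autoscaler applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Hypothetical helper: scale the replica count in proportion to demand.

    Uses the ratio-based formula documented for the Kubernetes Horizontal
    Pod Autoscaler, then clamps the result to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 3 replicas averaging 90% CPU against a 60% target: scale out to 5.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
```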

Command line tools like Terraform popularized infrastructure as code (IaC). They enabled teams to declare the desired state of their infrastructure in a domain-specific language (DSL) and run a CLI command to bring that infra to life. This worked really well early in the cloud native evolution, when environments were simpler. Terraform's DSL, however, is not a general-purpose programming language, and differing requirements across environments bred a parameter sprawl that was difficult to contain and manage.
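
At its core, the declarative model compares a declared desired state with what actually exists and derives the actions needed to close the gap. The Python sketch below is a toy model of that idea, loosely analogous to what `terraform plan` reports. It is not how Terraform is implemented, and the resource names and attribute dictionaries are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> attribute dictionary.
State = Dict[str, Dict[str, str]]


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Return (action, resource) pairs that would move `actual` to `desired`."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))   # declared but missing
        elif desired[name] != actual[name]:
            actions.append(("update", name))   # exists but has drifted
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))   # exists but no longer declared
    return actions


desired = {
    "vm-web": {"size": "medium"},
    "bucket-assets": {"region": "us-east-1"},
}
actual = {
    "vm-web": {"size": "small"},
    "vm-legacy": {"size": "small"},
}
print(plan(desired, actual))
# [('create', 'bucket-assets'), ('update', 'vm-web'), ('delete', 'vm-legacy')]
```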

Projects like Pulumi have attempted to remedy this situation by enabling the use of general-purpose programming languages in an IaC-inspired manner. However, the overlapping concerns between infrastructure provisioning and workload configuration still don't have a great solution. There is work to be done in managing cloud infrastructure as a dependency of the applications that need to run on it.
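
Part of the appeal of a general-purpose language is that per-environment differences can be expressed with ordinary types, functions, and error handling instead of ever-growing variable files. The Python sketch below illustrates only that idea. `ClusterConfig`, its fields, and the environment names are hypothetical, and the code does not use Pulumi's actual SDK.

```python
from dataclasses import dataclass, replace
from typing import Dict


@dataclass(frozen=True)
class ClusterConfig:
    """Hypothetical infrastructure settings for one environment."""
    node_count: int
    instance_type: str
    multi_az: bool


BASE = ClusterConfig(node_count=2, instance_type="small", multi_az=False)


def config_for(env: str) -> ClusterConfig:
    """Derive each environment from a shared base instead of copying variables."""
    if env == "dev":
        return BASE
    if env == "staging":
        return replace(BASE, node_count=3)
    if env == "prod":
        return replace(BASE, node_count=6, instance_type="large", multi_az=True)
    # An unknown environment fails loudly instead of silently using defaults.
    raise ValueError(f"unknown environment: {env}")


configs: Dict[str, ClusterConfig] = {
    env: config_for(env) for env in ("dev", "staging", "prod")
}
for env, cfg in configs.items():
    print(env, cfg)
```

Only the differences from the base are written down per environment, which is one way to keep parameter sprawl in check.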

Continuous Delivery

With the ability to deploy more software came the need to automate that deployment and, subsequently, ongoing updates to the running software. Having humans perform these routine tasks was no longer adequate: it was too slow and error-prone, and it was thankless, tedious work. So continuous delivery (CD) became popular.

CD systems generally consist of a pipeline of connected tools that take input variables and render the configurations that deploy software to the cloud and update it when a new version is available. Desired state is usually stored in a Git repo, and some action, such as a branch merge, triggers an operation that deploys or updates the running software.
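
A minimal sketch of the render step, assuming a single hypothetical manifest template and a merge to `main` as the trigger. Real CD systems chain several tools; this only shows how input variables become a rendered config, and how a missing variable surfaces as an error rather than a silent gap. The template fields and the `on_merge` hook are illustrative, not any particular CD tool's API.

```python
from string import Template
from typing import Optional

# Hypothetical manifest template kept in the Git repo alongside the app.
MANIFEST = Template(
    "name: $app\n"
    "image: $registry/$app:$version\n"
    "replicas: $replicas\n"
)


def render(variables: dict) -> str:
    """Render the manifest; substitute() raises KeyError if a variable is missing."""
    return MANIFEST.substitute(variables)


def on_merge(branch: str, variables: dict) -> Optional[str]:
    """Hypothetical trigger: only a merge to main produces a deployable config."""
    if branch != "main":
        return None
    return render(variables)


print(on_merge("main", {"app": "orders", "registry": "registry.example.com",
                        "version": "1.4.2", "replicas": 3}))
```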

This automation was a substantial gain, but as systems have become ever more complex and feature-rich, the configs kept in Git and edited by humans in text editors have grown unwieldy. CD can still perform well in some use cases, but when it breaks down, it is challenging to troubleshoot and causes considerable disruption to operations.

This increasing complexity is driven by a couple of factors. One is the adoption of microservices architectures: more distinct workloads with more integrations inherently lead to more complex delivery configuration. Another is the use of Kubernetes. The capabilities of Kubernetes are undeniable, but they come with significant configuration overhead. Together, these factors create an escalating maintenance burden in your CD systems. Keep this in mind when tackling cloud native software.
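
Some illustrative arithmetic shows why this burden escalates. Assuming each service needs a fixed number of settings in every environment, configuration values grow linearly with the number of services, while the number of possible service-to-service links grows quadratically, as n(n-1)/2. The counts below (3 environments, 15 settings per service) are made-up inputs, not measurements.

```python
def config_surface(services: int, environments: int, settings_per_service: int) -> dict:
    """Rough, illustrative count of what a CD system may need to keep consistent."""
    return {
        # every service needs its settings defined in every environment
        "config_values": services * environments * settings_per_service,
        # upper bound on service-to-service connections that may need wiring
        "possible_links": services * (services - 1) // 2,
    }


for n in (1, 5, 20):
    print(n, config_surface(n, environments=3, settings_per_service=15))
```

Going from 5 to 20 services quadruples the configuration values but multiplies the possible links by 19 (from 10 to 190).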

Microservices

As software development advanced, aided by readily available cloud infrastructure, many applications grew into giants with vast codebases and many engineers working on them concurrently. We started to call these apps "monoliths," and they were marked by slow release schedules.

Microservice architecture is an alternative to the monolith. Instead of an application's business logic residing in a single workload, the functionality is broken into distinct workloads that coordinate over the network. This approach has serious pitfalls, but the benefits are compelling: small teams can develop individual services with greater agility. This is because:

- Automated tests are less of a slog to maintain.

- With a tighter scope, the changes in each release are easier to coordinate. There are fewer merge conflicts with other developers' changes, and smaller changes can be made in isolation.

- Updates to running instances are easier to perform and less error-prone, since a simpler workload has fewer configuration settings.

- Mishaps in production updates have a smaller blast radius in many cases because only some features in the software are affected.
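
To make "coordinate over the network" concrete, here is a minimal sketch using only Python's standard library. A hypothetical inventory service owns its data behind an HTTP endpoint, and a hypothetical orders function asks it over HTTP instead of calling it in-process. The service names, URL layout, and JSON shape are assumptions for illustration. The network call also introduces failure modes, such as timeouts and unavailable services, that an in-process function call in a monolith does not have.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# Hypothetical "inventory" service data: only this service reads or writes it.
STOCK = {"widget": 7, "gadget": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        item = self.path.strip("/")
        body = json.dumps({"item": item, "in_stock": STOCK.get(item, 0)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_inventory() -> HTTPServer:
    """Run the inventory service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def can_order(inventory_url: str, item: str, qty: int) -> bool:
    """Hypothetical "orders" logic: ask the inventory service over HTTP."""
    with urlopen(f"{inventory_url}/{item}", timeout=2) as resp:
        return json.load(resp)["in_stock"] >= qty


server = start_inventory()
url = f"http://127.0.0.1:{server.server_address[1]}"
print(can_order(url, "widget", 3))  # True
print(can_order(url, "gadget", 1))  # False
server.shutdown()
server.server_close()
```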

If the pitfalls are avoided, the benefits to release velocity with microservices can be very worthwhile. For a deeper look at microservices, check out our blog post What are Microservices?

Containers & Kubernetes

Docker popularized containers. Containers allowed app dependencies to be reliably packaged into a delivery artifact, the container image, which made it much easier to run multiple workloads on each server in the cloud. More software could be deployed more reliably, with better resource utilization. For a deeper look at Docker and containers, see our blog post: What is Docker?

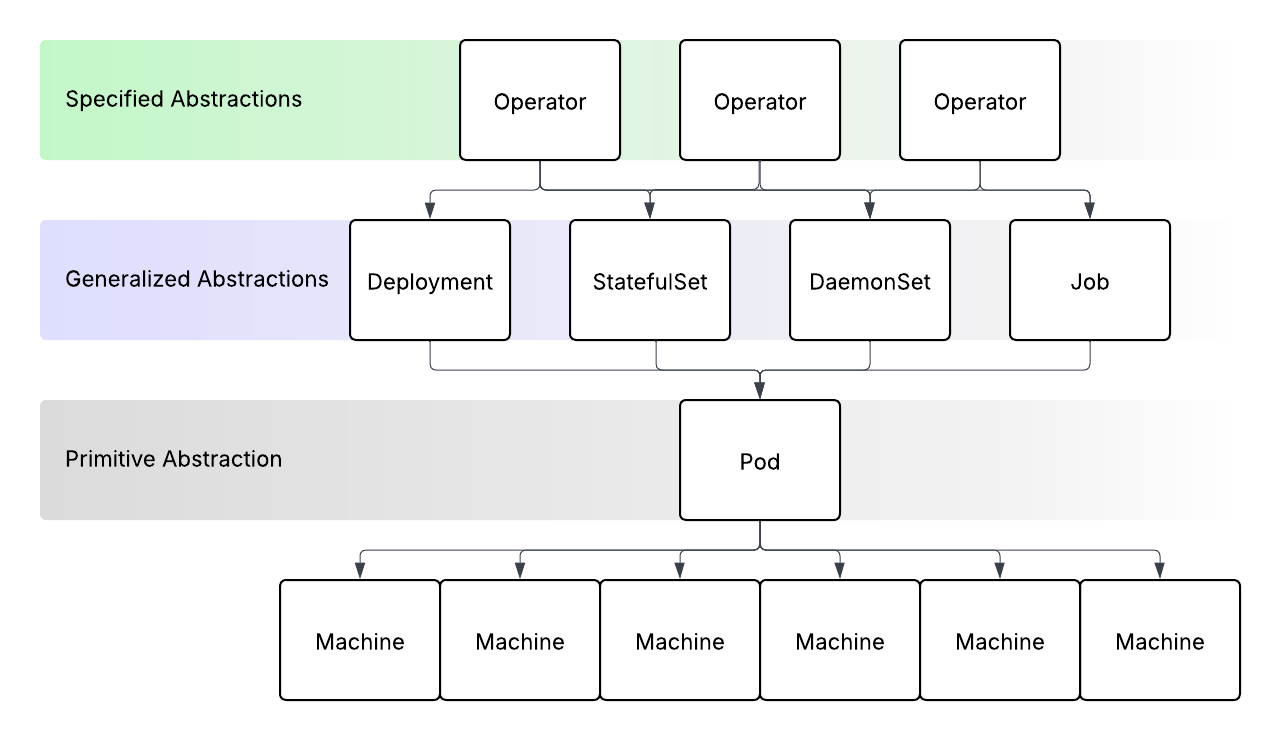

With more applications using containers, the need for a container orchestration system emerged quickly. Even though the app dependencies are reliably contained and we can deploy more apps, managing all the server inventory and container networking remains very challenging without container orchestration. This is where Kubernetes comes in. Now we have a single API to call to deploy and update our workloads. The Kubernetes control plane manages a cluster of servers for us. It schedules containers to any server that has capacity and helps orchestrate the container networking for connectivity. Integrations between Kubernetes and cloud providers have proliferated and we can deploy containerized workloads with much less toil. For more information about Kubernetes, see our blog post: What is Kubernetes?
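
The scheduling idea can be illustrated with a toy first-fit placer in Python. This is a deliberate simplification: the real kube-scheduler filters nodes and then scores the feasible ones against many criteria (affinity, taints, spreading, and more), whereas this sketch only checks hypothetical CPU and memory requests and takes the first node that fits. The node and workload names are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    cpu_free: float  # cores
    mem_free: int    # MiB
    pods: List[str] = field(default_factory=list)


@dataclass
class Pod:
    name: str
    cpu: float  # requested cores
    mem: int    # requested MiB


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Place a pod on the first node with enough free CPU and memory (first-fit)."""
    for node in nodes:
        if node.cpu_free >= pod.cpu and node.mem_free >= pod.mem:
            node.cpu_free -= pod.cpu
            node.mem_free -= pod.mem
            node.pods.append(pod.name)
            return node.name
    # No node has capacity; Kubernetes would leave such a pod Pending.
    return None


nodes = [Node("node-a", cpu_free=2.0, mem_free=4096),
         Node("node-b", cpu_free=4.0, mem_free=8192)]
pods = [Pod("web-1", 1.5, 1024), Pod("web-2", 1.5, 1024), Pod("db-1", 3.0, 6144)]
placements: Dict[str, Optional[str]] = {p.name: schedule(p, nodes) for p in pods}
print(placements)  # {'web-1': 'node-a', 'web-2': 'node-b', 'db-1': None}
```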

Kubernetes is complicated in several ways. Managed Kubernetes services have made installation easier, though it is still not trivial in most cases. Configuring workloads well is still complicated. And few applications consist solely of their own containers. They depend on support services running on Kubernetes as well as on cloud infrastructure, including some components that are part of the app stack itself, such as a managed database.
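
Those dependencies imply an ordering: the network before the database and cluster, and the cluster before the workloads that run on it. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to derive such an order for a hypothetical stack. The component names are illustrative, and this is not how any particular orchestrator resolves dependencies.

```python
from graphlib import TopologicalSorter

# Hypothetical app stack: each component maps to the components it depends on.
DEPENDENCIES = {
    "cloud-network": set(),
    "managed-database": {"cloud-network"},
    "kubernetes-cluster": {"cloud-network"},
    "ingress-controller": {"kubernetes-cluster"},
    "api-service": {"kubernetes-cluster", "managed-database"},
    "web-frontend": {"api-service", "ingress-controller"},
}


def deploy_order(deps: dict) -> list:
    """Return an order in which every component comes after its dependencies.

    graphlib raises CycleError (a ValueError subclass) on circular dependencies.
    """
    return list(TopologicalSorter(deps).static_order())


print(deploy_order(DEPENDENCIES))
```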

Takeaways

Embracing cloud native capabilities for your software has clear benefits. But like many things, it's all in how you navigate it. A new poker player who goes all-in on every hand will lose money quickly. So make small bets to begin with. As your team grows, and as your software's requirements grow, you can take advantage of more cloud native capabilities.

- Infrastructure-as-code solutions like Terraform or Pulumi will be useful in early stages. Just know that these infrastructure management systems become harder to maintain as complexity increases, especially where infrastructure configuration and workload configuration overlap.

- Continuous delivery systems can automate deploying and updating your running systems, but again, containing complexity will be important as your software matures. The more deployment variables you accumulate, the more points of failure can trip you up. If humans are updating text files, there will always be opportunities for typos or for values that don't get carried over between related configs.

- Leveraging microservices is a double-edged sword. There are significant benefits but if you charge down the wrong path, you could end up in a place you didn't bargain for. Consider reading our blog post What are Microservices? to delve into this subject more before taking that challenge on.

- Consider containerizing your software, but know that container orchestration with Kubernetes is non-trivial. Many teams start with simpler alternatives like Docker Compose or a managed service such as Google Cloud Run. The pitfall is that if you later need the features of a more capable system like Kubernetes, you'll be in for a re-platforming effort.

As with all evolving technology, there are limits to what most teams can do with cloud native technology today. There are clear areas for improvement. So be prepared to innovate or find workarounds if you double down on this strategy.

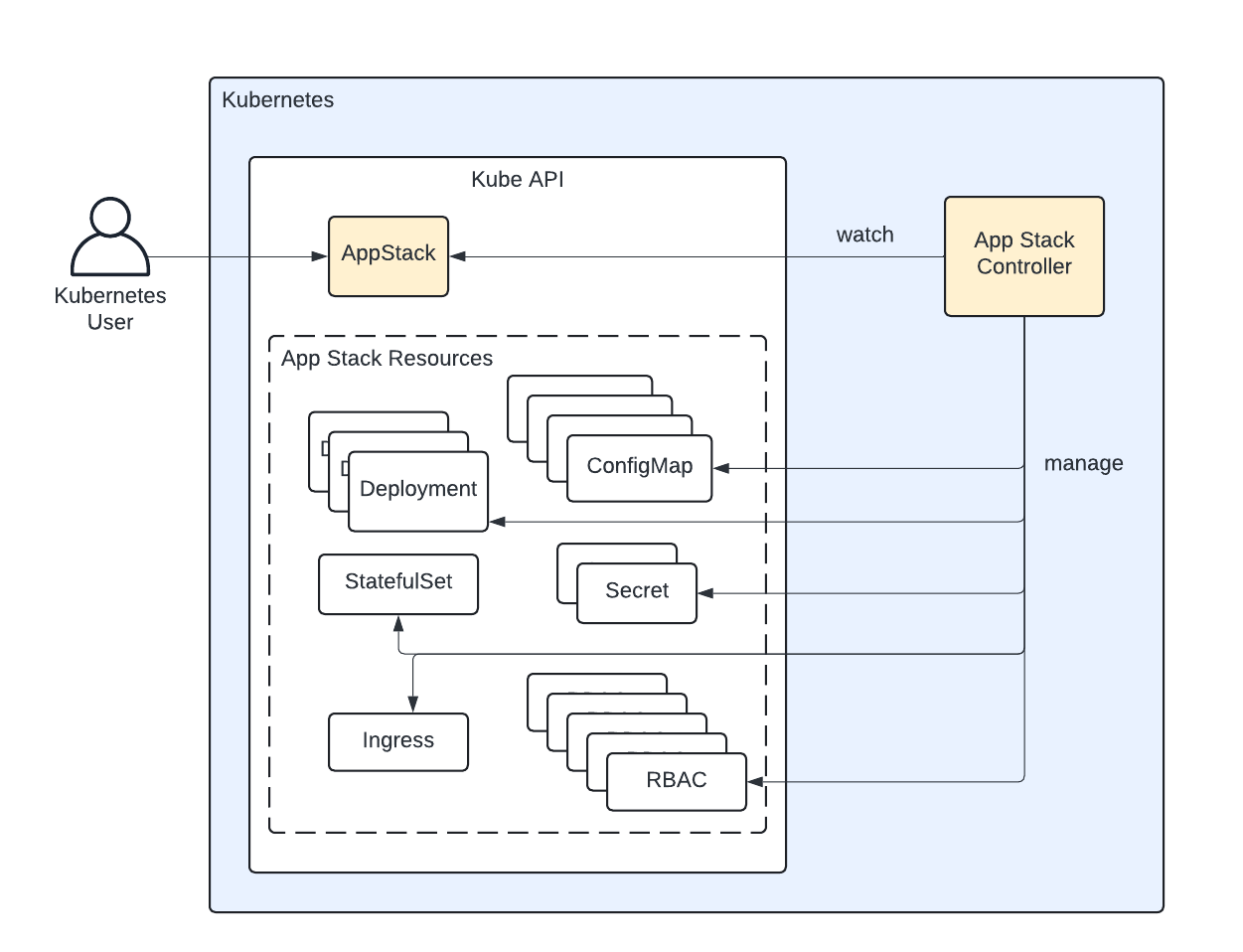

Threeport

Threeport is an application orchestrator designed to enable cloud native adoption while avoiding these traps. It lets users leverage IaC to get started while giving them a path forward when that kind of solution starts to break down. It provides an alternative to CD pipelines that lets you keep using the same config tools without getting stranded when those tools become insufficient. It lets you manage the interwoven dependencies of a microservice architecture more elegantly and deliver more reliably. And it lets you get started on Kubernetes while abstracting away much of its complexity. This helps you avoid a future re-platforming and, instead, grow the sophistication of your delivery systems as your software evolves and matures.

If you're employing cloud native systems, we encourage you to try it out. Threeport is open source and freely available.

Qleet

Qleet provides managed Threeport control planes to help you kick-start your efforts in using cloud native technologies with Threeport. It's free to get started using Qleet to manage your Threeport control planes.