What is Docker?

By Richard Lander, CTO | April 5, 2024

Docker is a containerization technology that lets us package a workload and all of its dependencies into a container image. Docker popularized container technology for software, so this article also explains what containers are. In a nutshell, containers allow you to run multiple workloads on a single server, isolated from one another by features of the kernel they share. Docker is now just one implementation of the container standards for building and running containers.

Dependency Isolation

One of the key concepts that helps clarify why Docker is useful is dependency isolation.

If you run a single workload on a single server, dependency isolation is not an issue. Install the workload's dependencies on that server and you're good to go! However, that is usually a very inefficient way to utilize your compute resources.

If you want to install multiple workloads on a single server, dependency compatibility becomes a concern. If you're deploying multiple Python applications to a single server and they don't use the same versions of shared Python libraries, you have to find a way to isolate them. If any workloads depend on packages installed on the OS, those have to be compatible too. Config management tools like Ansible helped manage this challenge, but beyond simple use cases the approach became complicated and cumbersome, and it didn't scale well: the more applications you had to manage, the more DevOps professionals you needed to write playbooks or scripts to handle dependency management.
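To make the conflict concrete, here is a minimal Python sketch that checks whether two applications could share one system-wide Python environment. The application names and pinned versions are hypothetical, invented for illustration; any package the apps pin to different versions is a conflict that has to be isolated somehow.

```python
"""Detect library version conflicts between apps that would share one host."""

# Hypothetical pinned requirements for two Python applications.
APP_REQUIREMENTS: dict[str, dict[str, str]] = {
    "billing-api": {"requests": "2.31.0", "sqlalchemy": "1.4.52"},
    "reporting-job": {"requests": "2.31.0", "sqlalchemy": "2.0.29"},
}


def find_conflicts(apps: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Return {package: {app: version}} for packages pinned to different versions."""
    pins: dict[str, dict[str, str]] = {}
    for app, requirements in apps.items():
        for package, version in requirements.items():
            pins.setdefault(package, {})[app] = version
    # A package conflicts when the apps that pin it disagree on the version.
    return {
        package: by_app
        for package, by_app in pins.items()
        if len(set(by_app.values())) > 1
    }


if __name__ == "__main__":
    for package, by_app in find_conflicts(APP_REQUIREMENTS).items():
        print(f"{package}: {by_app}")
```

Both apps agree on `requests`, so only `sqlalchemy` is reported. On a shared host, one of the two apps would break unless its dependencies were isolated.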

Virtualization

Virtualization was the first big leap in dependency isolation. It allowed us to carve up physical servers into distinct virtual servers that had all the features of the underlying physical server.

Each virtual server has its own kernel and user space, so it looks just like a physical server to the workload running there. This opened the door to running different operating systems, each with its own installed packages, on a single piece of server hardware.

In this way, dependencies for workloads running on different virtual servers could be isolated from one another, and server utilization improved without giving up that isolation.

Containerization

Then came Docker. Essentially, Docker allowed different workloads to share the same kernel while retaining dependency isolation.

Containers allow you to package all the user space dependencies into an image together with your workload and deploy that image to a server. One container can have the OS packages and Python libraries it needs and run alongside another container with different packages and libraries, while both share the same kernel.
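On Linux, the isolation that containers rely on comes from kernel features such as namespaces, which is why containers can share one kernel. The minimal Python sketch below assumes a Linux host with `/proc` mounted; it prints the kernel release and the current process's namespace identifiers. With Docker's default settings, running it on the host and again inside a container would typically show the same kernel release but different identifiers for these namespaces. On systems without `/proc`, the identifiers come back as `None`.

```python
import os
import platform
from typing import Optional

# Namespace types that a container runtime typically creates per container.
NAMESPACES = ("mnt", "pid", "net", "uts", "ipc")


def namespace_ids(pid: str = "self") -> dict[str, Optional[str]]:
    """Read namespace identifiers such as 'pid:[4026531836]' from /proc."""
    ids: dict[str, Optional[str]] = {}
    for ns in NAMESPACES:
        try:
            # Each entry in /proc/<pid>/ns is a symlink naming the namespace.
            ids[ns] = os.readlink(f"/proc/{pid}/ns/{ns}")
        except OSError:
            # Not Linux, /proc not mounted, or insufficient permissions.
            ids[ns] = None
    return ids


if __name__ == "__main__":
    # The kernel release is shared: a container reports the host's kernel.
    print("kernel release:", platform.release())
    for ns, ident in namespace_ids().items():
        print(f"{ns:>4}: {ident}")
```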

Do We Still Need Virtualization?

This raises the question: if we have Docker, why do we still need virtual machines? In certain use cases, we don't. In most cases, however, the two work hand-in-hand and each adds value to the stack. Using both machine virtualization and containers comes down to separating layers of management concerns. Dividing large physical servers into virtual servers, each running multiple containers, is similar to a country being divided into state and local governments so that progressively more granular concerns can be managed at the right layer.

Not Everything is Containerized

Many organizations still run software that is not containerized. If a company has converted some of its software stack to containers but still runs its critical databases outside of containers, it is still very helpful to carve up physical servers: some virtual machines run the containerized workloads while others host the databases.

Virtualization Enables the "Cloud"

Also, if you rent servers from a cloud provider, virtualization technology is what allows them to provide you a new server on short notice. If each time you needed a new server, you had to get a human to acquire a physical machine and plug it into a data center, you would wait much longer for that server to become available.

Autoscaling

If you're using a container orchestrator like Kubernetes, you are likely using cluster autoscaling to add and remove virtual machines from the cluster as demand dictates. Again, if you had to manage physical servers to horizontally scale the compute capacity of your Kubernetes clusters, there would be nothing "auto" about your scaling.
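The core idea of autoscaling can be illustrated with a deliberately simplified capacity calculation. This is not how the Kubernetes Cluster Autoscaler is implemented (it adds nodes in response to pods that cannot be scheduled rather than computing a target like this). The function and parameter names below are hypothetical, and CPU is expressed in millicores, as Kubernetes does.

```python
def desired_node_count(
    total_requested_millicores: int,
    node_allocatable_millicores: int,
    min_nodes: int,
    max_nodes: int,
) -> int:
    """Nodes needed to fit the requested CPU, clamped to the cluster's bounds."""
    if node_allocatable_millicores <= 0:
        raise ValueError("node_allocatable_millicores must be positive")
    # Ceiling division in integer arithmetic: a partially used node still counts.
    needed = -(-total_requested_millicores // node_allocatable_millicores)
    # Never shrink below the minimum or grow past the maximum node count.
    return max(min_nodes, min(max_nodes, needed))


if __name__ == "__main__":
    # Hypothetical cluster: 4-core nodes, between 1 and 10 of them.
    for demand in (0, 9_500, 100_000):
        print(demand, "->", desired_node_count(demand, 4_000, 1, 10))
```

With 9,500 millicores of demand and 4,000 allocatable millicores per node, the sketch asks for 3 nodes; the bounds keep an idle cluster at 1 node and cap a spike at 10.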

Container Images

So how do we get these containers running on servers? It all starts with container images, and this is the innovation that really catapulted Docker's adoption. User space isolation technologies predated Docker, but Docker gave developers a declarative way to define a workload's dependencies: the Dockerfile. A Dockerfile is essentially a clear manifest of all the dependencies a workload has, and it allows you to build a container image that can be deployed to any server with a compatible container runtime and CPU architecture and run as a container. That is why so many software projects today include a Dockerfile in their source code, so that users can build and deploy a container image.
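To show what such a manifest looks like, here is a small Python sketch that renders a minimal Dockerfile for a hypothetical Python web app. The base image `python:3.12-slim`, the OS package `libpq5`, and the files `requirements.txt` and `app.py` are assumptions chosen for illustration; the Dockerfile instructions themselves (`FROM`, `WORKDIR`, `RUN`, `COPY`, `CMD`) are standard.

```python
import json


def render_dockerfile(base_image: str, os_packages: list[str], command: list[str]) -> str:
    """Render a minimal Dockerfile that declares a workload's dependencies."""
    lines = [f"FROM {base_image}", "WORKDIR /app"]
    if os_packages:
        # OS-level dependencies, installed without recommended extras to keep the image small.
        lines.append(
            "RUN apt-get update && apt-get install -y --no-install-recommends "
            + " ".join(sorted(os_packages))
            + " && rm -rf /var/lib/apt/lists/*"
        )
    lines += [
        # Language-level dependencies come from the app's pinned requirements file.
        "COPY requirements.txt .",
        "RUN pip install --no-cache-dir -r requirements.txt",
        # Finally, the workload itself and the command that starts it (exec form).
        "COPY . .",
        "CMD " + json.dumps(command),
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("python:3.12-slim", ["libpq5"], ["python", "app.py"]), end="")
```

Copying `requirements.txt` and installing it before copying the rest of the source is a common layering choice: the dependency layer can be reused from the build cache when only application code changes.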

Things to Know

If you're new to Docker and containerization, here are a few things to keep in mind.

Two Parts to the Equation

There are two parts of containerization that are important to distinguish:

- Container Image: this is the artifact that is built to contain a workload and its dependencies. Images are built either manually during development and testing, or in a continuous delivery pipeline for routine software delivery. In either case, they are pushed to a container registry to await deployment.

- Container Runtime: this is the software that runs on a server, pulls images from container registries, and runs the container in its runtime environment.
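When a runtime pulls an image, it first has to work out which registry and repository the image name refers to. The Python sketch below follows Docker's naming conventions in simplified form: the first path component is treated as a registry host only if it contains a `.` or `:` or is `localhost`; otherwise the image comes from Docker Hub (`docker.io`), and single-name images such as `nginx` live under `library/`. It skips the validation rules a real client applies, and the example image names are illustrative.

```python
from typing import NamedTuple, Optional

DEFAULT_REGISTRY = "docker.io"


class ImageReference(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_reference(ref: str) -> ImageReference:
    """Split an image reference into registry, repository, tag, and digest (simplified)."""
    # A digest, if present, follows '@' and pins exact image content.
    name, _, digest = ref.partition("@")
    # A tag follows the last ':' only if that ':' comes after the last '/'
    # (otherwise the ':' belongs to a registry port such as localhost:5000).
    tag: Optional[str] = None
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        # No registry host given: default to Docker Hub.
        registry, repository = DEFAULT_REGISTRY, name
        if "/" not in repository:
            # Official images live under the "library" namespace.
            repository = f"library/{repository}"
    if tag is None and not digest:
        tag = "latest"  # Docker's default tag when none is given
    return ImageReference(registry, repository, tag, digest or None)


if __name__ == "__main__":
    for ref in ("nginx", "localhost:5000/team/app:1.2", "ghcr.io/example/tool@sha256:abc123"):
        print(parse_image_reference(ref))
```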

Not All Containers Are Created Equal

Smaller is better. Before a container can run on a machine, its image must be pulled to that machine over a network. The smaller the image, the faster it can be downloaded to its runtime environment. This becomes important when recovering from failures, where every second spent pulling an image adds to downtime.
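A back-of-the-envelope calculation shows why size matters. This Python sketch assumes the whole image is transferred at a steady link speed and ignores layer caching, compression details, and registry latency; the image sizes and the 100 Mbit/s link are hypothetical.

```python
def pull_seconds(image_megabytes: float, link_megabits_per_second: float) -> float:
    """Rough transfer time: megabytes -> megabits (x8), divided by link speed."""
    if link_megabits_per_second <= 0:
        raise ValueError("link speed must be positive")
    return image_megabytes * 8 / link_megabits_per_second


if __name__ == "__main__":
    # Hypothetical images pulled over a 100 Mbit/s link.
    for label, size_mb in (("bloated", 1_000), ("slim", 50)):
        print(f"{label}: {pull_seconds(size_mb, 100):.0f} s")
```

At 100 Mbit/s, a 1,000 MB image takes about 80 seconds to transfer versus about 4 seconds for a 50 MB image, and that difference is paid on every node that has to pull the image during recovery.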

Also, the more packages that are installed in your image, the more attack surface area it has. Install only those dependencies that are actually needed in your container images to reduce the potential vulnerabilities it may have. For example, the distroless container images offered by Google are a great solution for a base image upon which to install your software.

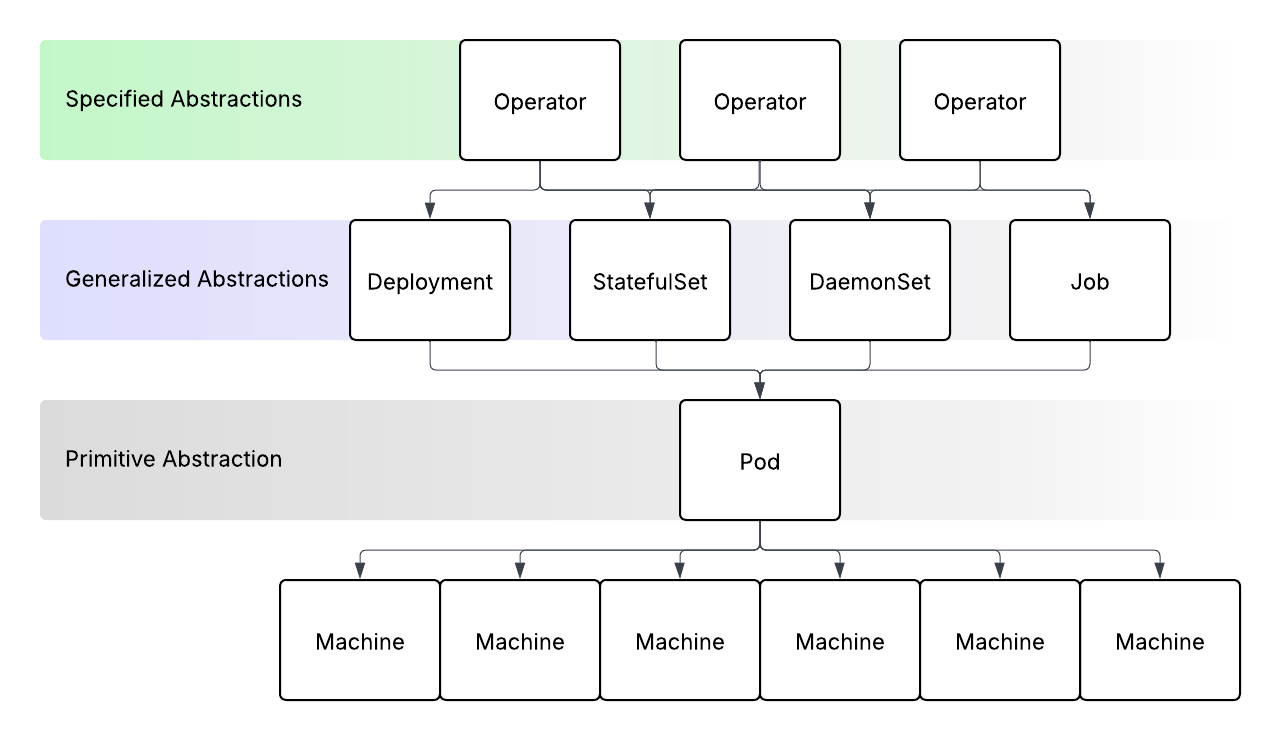

Container Orchestration is a Thing

Containers are a wonderful improvement for managing multiple workloads on each server, and they are a great enabler for microservices architecture. However, as the number of distinct workloads under management grows, so does the challenge of deciding how to deploy them to servers. This is the reason for the wide adoption of Kubernetes. If you'd like to learn more about Kubernetes, check out our blog post: What is Kubernetes?
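At its core, deciding where containers go is a packing problem. The Python sketch below uses first-fit decreasing bin packing to place containers on identical servers by CPU request. The Kubernetes scheduler is far more sophisticated (it filters and scores nodes on many criteria), so treat this only as an illustration of the problem; the workload names and CPU figures are hypothetical.

```python
def first_fit_decreasing(requests: dict[str, int], capacity: int) -> list[dict[str, int]]:
    """Assign each container's CPU request (millicores) to the first server with room."""
    servers: list[dict[str, int]] = []  # placements per server
    free: list[int] = []  # remaining capacity per server, same order as servers
    # Largest requests first: big items are hardest to fit, so place them early.
    for name, cpu in sorted(requests.items(), key=lambda item: item[1], reverse=True):
        if cpu > capacity:
            raise ValueError(f"{name} requests more CPU than any server has")
        for i, remaining in enumerate(free):
            if cpu <= remaining:
                servers[i][name] = cpu
                free[i] -= cpu
                break
        else:
            # No existing server has room, so bring another one into use.
            servers.append({name: cpu})
            free.append(capacity - cpu)
    return servers


if __name__ == "__main__":
    # Hypothetical workloads and 4-core (4,000 millicore) servers.
    workloads = {"api": 1_500, "worker": 2_500, "cache": 1_000, "web": 500, "batch": 3_000}
    for index, placed in enumerate(first_fit_decreasing(workloads, 4_000)):
        print(f"server {index}: {placed}")
```

Placing the largest requests first tends to leave less stranded capacity. Here, 8,500 millicores of requests fit on 3 servers of 4,000 millicores each, which is the minimum possible.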

More Than Docker

The Open Container Initiative (OCI) has created industry standards for container technology. There are now multiple solutions available both for building container images and for running them. I still use Docker to build images, and so do many others, but it's important to know that other options exist so you can evaluate them when you encounter them.
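As one example of what these standards pin down, the Open Container Initiative (OCI) Image Format Specification describes an image with a manifest that references a config blob and a list of layer blobs, each by media type, SHA-256 content digest, and size. The Python sketch below assembles such a manifest; the blob contents are placeholders rather than a real image config and real gzipped layer tarballs.

```python
import hashlib
import json

MANIFEST_TYPE = "application/vnd.oci.image.manifest.v1+json"
CONFIG_TYPE = "application/vnd.oci.image.config.v1+json"
LAYER_TYPE = "application/vnd.oci.image.layer.v1.tar+gzip"


def descriptor(media_type: str, blob: bytes) -> dict:
    """Describe a blob by media type, content digest, and size in bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def build_manifest(config_blob: bytes, layer_blobs: list[bytes]) -> dict:
    """Assemble an OCI image manifest that points at its config and layers."""
    return {
        "schemaVersion": 2,
        "mediaType": MANIFEST_TYPE,
        "config": descriptor(CONFIG_TYPE, config_blob),
        "layers": [descriptor(LAYER_TYPE, blob) for blob in layer_blobs],
    }


if __name__ == "__main__":
    # Placeholder bytes: a real image would use a full config JSON and gzipped tar layers.
    config = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
    layers = [b"placeholder layer 1", b"placeholder layer 2"]
    print(json.dumps(build_manifest(config, layers), indent=2))
```

Because every blob is addressed by its digest, any registry or runtime that implements the same specification can verify the content it receives, which is part of what lets images built with one tool run on runtimes from other projects.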

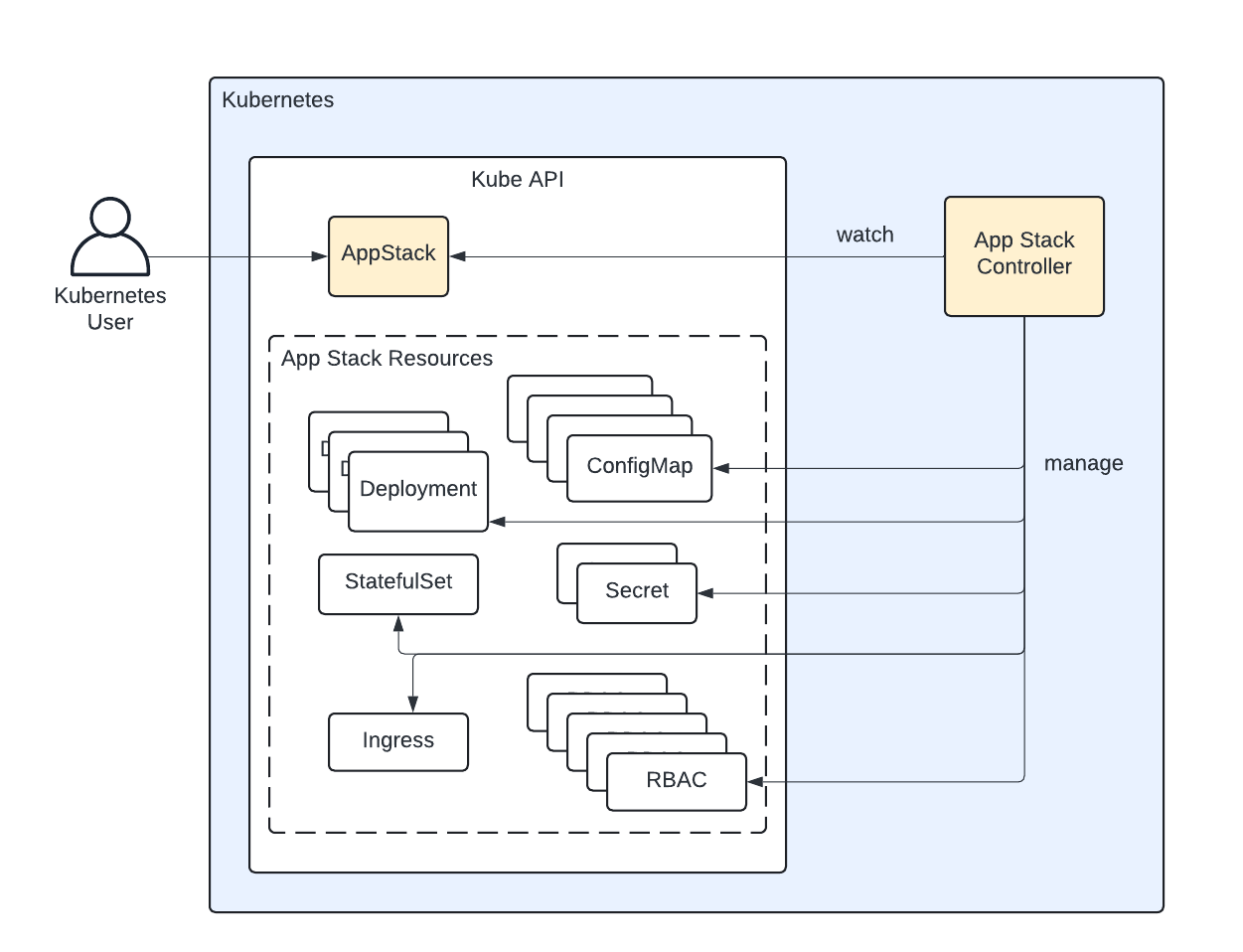

About Qleet

Qleet provides managed Threeport control planes. Threeport orchestrates the delivery of containerized workloads. It manages the cloud infrastructure, Kubernetes clusters, cloud provider managed services, and support services that run as containerized services on Kubernetes, all in support of your workload. If you're building and running containerized software, check it out!