What is Kubernetes?

By Richard Lander, CTO | April 5, 2024

Kubernetes is the most capable container orchestration software that has been developed to date. It is a system designed to automate the deployment, scaling, and management of containerized applications across multiple servers, and has become an industry standard component of the server-side software runtime stack. In short, Kubernetes provides a control plane for a cluster of servers so that you can deploy containerized workloads without having to connect to individual servers to install them there.

Kubernetes built on the advancements of Docker. Docker gave us the gift of packaged dependencies. For a deeper dive into containerization and what it did to advance software delivery, check out our blog post: What is Docker?

Before Kubernetes

Once upon a time, we deployed applications directly to servers. Config management tools like Ansible helped greatly, but workload dependency and server inventory management were still quite challenging. Docker solved the dependency management challenge, but those of us who were running Docker in production before Kubernetes were still juggling server inventory and configuring servers to spin up and manage our container runtime environments. Container networking between servers was complex, and handling machine failures was not easy.

Config management with CLI tools like Ansible required DevOps engineers to connect from their laptop to each remote server to install software and all its dependencies. This was all driven from server inventory and playbooks (text files) on their local machine, usually kept in source control.

Kubernetes Core Value

Kubernetes provided a single API to manage a cluster of servers. Early on, installing Kubernetes on a group of machines was non-trivial, but once that was worked out you could call the Kubernetes API to deploy workloads and it would find the available compute in the cluster for you. We still needed a container networking solution, but when that was installed and configured, the day-to-day container networking was automated as well. It was a huge leap forward in the reduction of toil for delivering containerized workloads to clusters of remote servers. Smaller teams could manage more software delivery.

We enjoyed incredible gains in scalability and resiliency in automated ways. Now a DevOps engineer could connect to the Kubernetes control plane and offload much of what they had to configure themselves previously.
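To make "find the available compute in the cluster" concrete, here is a minimal sketch of the placement idea in Python. It is a toy under stated assumptions, not how the Kubernetes scheduler is implemented: the `Node` and `Pod` classes, the `schedule` function, and the "most free CPU wins" rule are all hypothetical names and choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A server in the cluster with some free CPU (millicores) and memory (MiB)."""
    name: str
    cpu_millis: int
    memory_mib: int
    pods: list = field(default_factory=list)


@dataclass
class Pod:
    """A workload with resource requests, loosely modeled on a Kubernetes pod."""
    name: str
    cpu_millis: int
    memory_mib: int


def schedule(pod, nodes):
    """Place the pod on the fitting node with the most free CPU.

    Returns the chosen node name, or None when no node has room.
    """
    candidates = [
        n for n in nodes
        if n.cpu_millis >= pod.cpu_millis and n.memory_mib >= pod.memory_mib
    ]
    if not candidates:
        return None  # nothing fits; the workload stays unplaced in this toy
    target = max(candidates, key=lambda n: n.cpu_millis)
    # Reserve the requested resources on the chosen node.
    target.cpu_millis -= pod.cpu_millis
    target.memory_mib -= pod.memory_mib
    target.pods.append(pod.name)
    return target.name


nodes = [Node("node-a", 2000, 4096), Node("node-b", 4000, 8192)]
pods = [
    Pod("web", 1500, 1024),
    Pod("api", 2000, 2048),
    Pod("worker", 1000, 512),
    Pod("batch", 3000, 2048),
]
placements = {p.name: schedule(p, nodes) for p in pods}
print(placements)
```

The caller never names a server. It states what the workload needs, and the placement logic picks a machine or reports that nothing fits, which is the shift away from per-server inventory described above.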

Pluggability

Kubernetes doesn't solve all the problems. It doesn't need to. It provides interfaces for common concerns. The Container Runtime Interface (CRI) allows you to use any compatible container runtime that suits your needs. The Container Network Interface (CNI) and Container Storage Interface (CSI) do the same for their respective concerns. This enables the community to develop solutions that plug into Kubernetes.

Extensibility

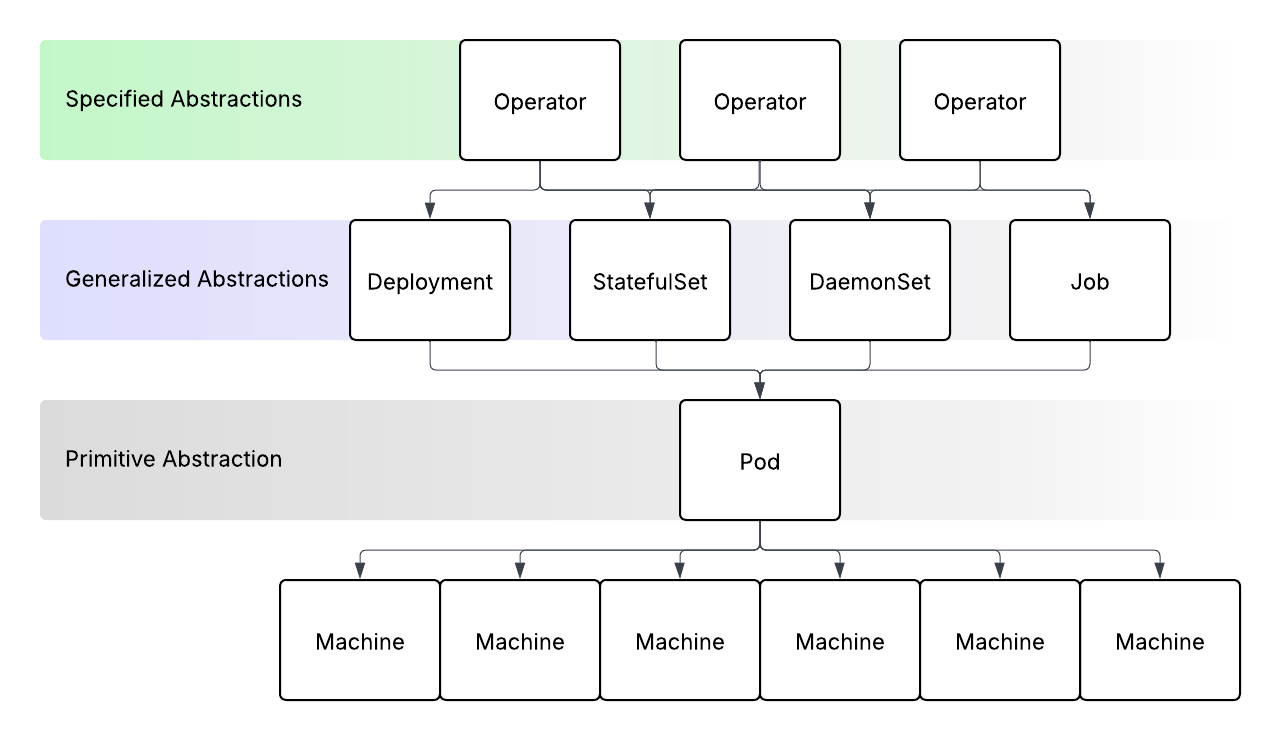

The Kubernetes control plane was designed very well. It consists of a set of controllers that each manage distinct areas and coordinate through the API. It allows sophisticated functionality to be divided into distinct software components, which is great for managing complex concerns.
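The controller pattern can be sketched as a reconciliation step: compare the desired state with the observed state and compute the actions that close the gap. The Python below is a simplified illustration, not Kubernetes code. The function names `reconcile` and `apply_actions`, and the use of plain dictionaries mapping workload names to replica counts, are assumptions made for the example.

```python
def reconcile(desired, actual):
    """Return the actions that move `actual` toward `desired`.

    Both arguments map a workload name to a replica count.
    """
    actions = []
    for name, want in sorted(desired.items()):
        have = actual.get(name, 0)
        if have < want:
            actions.append(("scale_up", name, want - have))
        elif have > want:
            actions.append(("scale_down", name, have - want))
    # Anything running that is no longer declared gets removed.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name, actual[name]))
    return actions


def apply_actions(actions, actual):
    """Apply actions to the observed state in place (stand-in for real side effects)."""
    for verb, name, count in actions:
        if verb == "scale_up":
            actual[name] = actual.get(name, 0) + count
        elif verb == "scale_down":
            actual[name] -= count
        elif verb == "delete":
            del actual[name]


desired = {"web": 3, "api": 2}
actual = {"web": 1, "old-job": 1}

first_pass = reconcile(desired, actual)
apply_actions(first_pass, actual)
second_pass = reconcile(desired, actual)  # nothing left to do once states match

print(first_pass)
print(second_pass)
```

Because each pass only looks at the difference between the two states, running it again after the states match produces no actions. That property is what lets separate components each own one concern and keep retrying safely.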

It also opens the door to tremendous extensibility.

In addition to various interfaces that enable pluggability for core concerns, Kubernetes allows you to provide custom abstractions for your needs. You can add new objects to the Kubernetes API and write custom controllers to manage those objects. This provides almost limitless possibilities in what you can do with your Kubernetes clusters to manage workloads there.
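As a rough illustration of adding a new object to the API, the sketch below builds a CustomResourceDefinition and one instance of the new type as Python dictionaries and prints them as JSON, which Kubernetes accepts as a manifest format alongside YAML. The `example.com` group, the `WebApp` kind, its `image` and `replicas` fields, and the image reference are all hypothetical, chosen only for this example.

```python
import json

GROUP = "example.com"  # hypothetical API group

# Definition of the new object type.
crd = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    # The name must take the form <plural>.<group>.
    "metadata": {"name": f"webapps.{GROUP}"},
    "spec": {
        "group": GROUP,
        "scope": "Namespaced",
        "names": {"plural": "webapps", "singular": "webapp", "kind": "WebApp"},
        "versions": [{
            "name": "v1alpha1",
            "served": True,
            "storage": True,
            "schema": {"openAPIV3Schema": {
                "type": "object",
                "properties": {"spec": {
                    "type": "object",
                    "required": ["image"],
                    "properties": {
                        "image": {"type": "string"},
                        "replicas": {"type": "integer", "minimum": 1},
                    },
                }},
            }},
        }],
    },
}

# One instance of the new type, which a custom controller would act on.
web_app = {
    "apiVersion": f"{GROUP}/v1alpha1",
    "kind": "WebApp",
    "metadata": {"name": "storefront"},
    "spec": {"image": "registry.example.com/storefront:1.0", "replicas": 2},
}

print(json.dumps(crd, indent=2))
print(json.dumps(web_app, indent=2))
```

The definition only teaches the API to store and validate `WebApp` objects. Nothing happens to them until a custom controller watches for them and reconciles real resources to match.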

Not All Roses

Kubernetes provides incredible capabilities. But those capabilities come at the cost of complexity. The Kubernetes API is pretty vast. Configuring all your resources for the result you need is often a daunting task. There is a lot to learn and get right to ensure your workloads are happy and behave the way you wish.

Cloud Native Ecosystem

Furthermore, the pluggability and extensibility of Kubernetes have led to a vast number of options in the cloud native ecosystem. Simply figuring out which solutions to use to meet your requirements can be overwhelming. Kubernetes by itself is insufficient. There are many support services needed for network ingress routing, TLS termination, DNS record management, observability, secrets, and more.

Multi Cluster

And while Kubernetes handles intra-cluster concerns very well, very few organizations use a single Kubernetes cluster. Production environments are separated from development and staging environments. Clusters need to be deployed to different locations to be closer to the end user. And now you have a cluster management concern to wrangle. This gets even more complicated if you have applications that are multi-region or you have regional failover requirements.

Containerized Software Delivery

With a complex system comes complex delivery requirements. Even once you have your resource configuration sorted out, you now have to manage the variables that come with each deployment. Is it a production deployment that requires autoscaling? Which cluster does it belong in? What dependencies does it have, and are they present in the target cluster? A lot of tooling has arisen to solve parts of this, but those tools don't handle overlapping concerns well. Configuring them in delivery pipelines adds yet another layer of complexity and overhead.

More Than Containers

Most applications are more than just the containers that are managed in Kubernetes. They often have managed service dependencies that are run by a cloud provider or other managed service provider. If you use an infrastructure-as-code tool like Terraform to manage cloud provider resources, how do you connect the overlapping configuration concerns with Kubernetes resource management tools like Helm or Kustomize?

The Result

As a result, many organizations have hired large teams of DevOps and platform engineers to manage these complex relationships. Even with these experts, it's challenging to get this all right and provide the right abstractions to your developers for a self-service delivery experience.

Many other organizations have understandably avoided this rabbit hole altogether and gone with a simpler platform-as-a-service (PaaS) solution that allows them to get up and running quickly. That works great until they encounter requirements not supported by their PaaS. Then they often find themselves in the unfortunate situation of having to migrate to Kubernetes to gain the capabilities there. Now they have the complexity of Kubernetes compounded by the challenge of migrating their workloads.

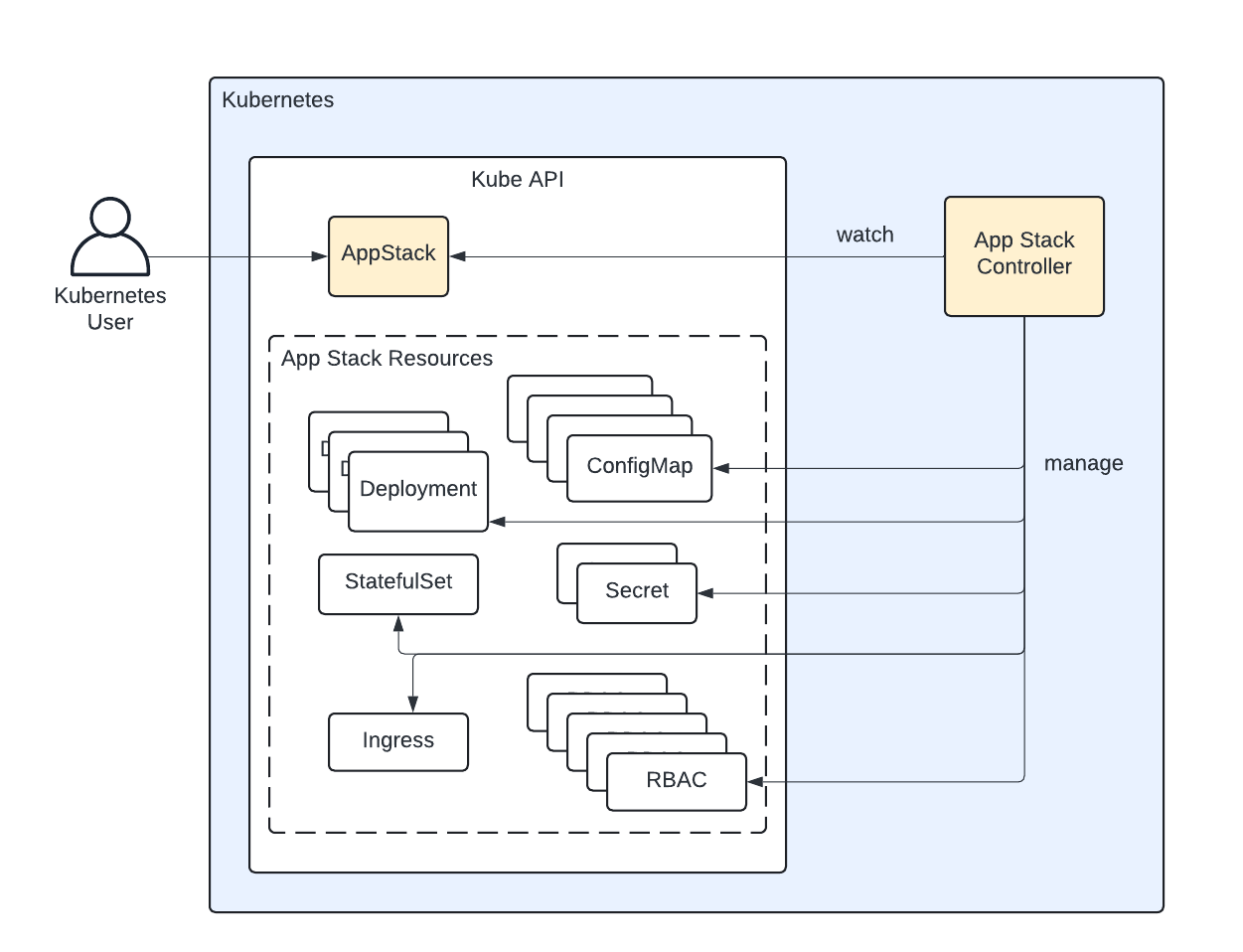

Threeport

We built Threeport to address these concerns. Threeport exists to provide an API and control plane that does for your applications what Kubernetes does for your containers. It orchestrates your application and its dependencies. It manages the cloud infrastructure, Kubernetes clusters, cloud provider managed services, and support services that run as containerized services on Kubernetes, all in service of your workload.

Threeport exists to tie all these pieces together so you can deploy your workload with its dependencies and leverage the capabilities of Kubernetes without the overhead of building the entire platform yourself.

Qleet

While it is trivial to deploy and use Threeport for testing and development, Threeport is a complex distributed software system in and of itself. Management, upgrades and troubleshooting are non-trivial. This is why Qleet exists: to provide you with fully managed Threeport control planes. We put cloud-native capabilities within reach of every organization.