What are Microservices?

By Richard Lander, CTO | April 12, 2024

Microservice architecture is the practice of decomposing the functionality of an application into distinct workloads that interface with one another over the network. This is also sometimes referred to as distributed systems architecture.

The vast majority of applications are distributed in some small way. A conventional 3-tier web application commonly has the application logic in one workload that calls a database over the network. However, the term microservices generally applies to further distribution of the application logic into multiple components.

What is a Monolith?

Microservices are an alternative to a monolith. A monolith has all business logic in a single workload. It is a much simpler software architecture that keeps all source code in a single repo. This is commonly a good starting point for many software projects. Keeping a single codebase and single deliverable workload has the following benefits:

- Simplicity: There are no API contracts that need to be maintained with other components of the application.

- Performance: There are no network calls between services to degrade performance. In a distributed design, co-locating all components on a single machine or on a local network may keep this from becoming a significant concern, but it is still worth being aware of.

- Vulnerabilities: The vulnerability surface area is confined to a single workload, and the concern about network traffic being intercepted between services is eliminated.

- Troubleshooting: If you have logs and metrics from a single workload to collect and view, this presents a pretty clear-cut monitoring and debugging situation. You don't have the problem of troubleshooting across different running workloads to isolate an issue.

- Data Persistence: There is usually a single data model and single source of truth for persistent data with a monolith. Data consistency and integrity are much more straightforward to maintain. Backup and restoration operations are also much simpler to manage.

In short, there are fewer coordination factors to contend with when employing a monolith for your application.

The challenges with monoliths accrue as the application grows in complexity and/or as more developers contribute to the project. The following are common challenges that arise:

- Changes: When multiple contributors are concurrently making changes to the same project, incompatible changes can clash and merge conflicts can become complicated.

- Release Cycles: When there are many changes happening at once, the last change to be completed, tested and approved determines the release date for the software. Release cycles can slow down and some contributors can find themselves waiting on others to get their changes rolled out. This can slow down or complicate work on subsequent changes for future releases.

- Efficiency: In order to coordinate changes and their release cycles, more human coordination naturally follows. More meetings, more design sessions, more time spent coordinating with other team members rather than coding.

- Testing: The automated unit, integration, and end-to-end testing systems can become very complicated for large, feature-rich projects. Keeping the software stable can then require a significant investment in test maintenance.

As requirements for software systems have grown in sophistication, and as team sizes have grown to support these requirements, the case for microservices has become more compelling. If you're encountering some of the challenges with monoliths or looking to avoid them on your next project, and you want to leverage microservices, here are some things to think about.

Data Model

Data modeling is critical for any piece of software, but it can be even more important if different services each maintain their own data persistence. If different services maintain their own databases, the source of truth for parts of the system can become uncertain. Consider a centralized data persistence service: an API that is responsible for storing the state of the system and that is accessed by all other components in the system. If there are very clear boundaries between different services and the state they're responsible for, that may constitute an exception, but beware. Having a clear source of truth for the components of your app is critical.

Hub & Spoke vs Chained Microservices

One pitfall in microservices is chained services. Picture a user request that triggers a front end to call another service, which in turn calls another service, which calls yet another, with responses cascading back up until the end user receives a response. If your design looks like this, consider an alternative. Tracking requests and finding where problems occurred is going to become a significant challenge. Distributed tracing systems, and the service instrumentation needed to use them, will become some team members' full-time job.

Instead, consider a hub and spoke model whereby orchestration happens through a hub that offloads specific operations to services on the spokes. This provides more flexibility to allow long-running operations to occur asynchronously and to call particular services in the right context while returning a faster response to the user. Any error that occurs in a user request will be easier to isolate, as will performance bottlenecks. The hub, typically your API, does need to contain logic on which services need to be called in response to which changes, but that centralization of orchestration is quite beneficial. You can also introduce notification systems with queues (rather than direct HTTP requests) between your API and individual services if the need arises. Extending the system to introduce new features becomes more pluggable, and each service needs to respect just one contract, the one with the API, rather than contracts with multiple other services.
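To make the hub and spoke idea a little more concrete, here is a minimal in-process sketch in Python. It is an illustration under assumptions, not a reference design: the `Hub` class, the `order.created` event type, and the `email_q` and `billing_q` queues are all hypothetical names, and the standard library `queue.Queue` stands in for whatever message queue you would actually run between your API and its services.

```python
import queue
from dataclasses import dataclass, field


@dataclass
class Hub:
    """Hypothetical hub: the only component that knows which spokes exist."""

    # Maps an event type to the queues of the spoke services that handle it.
    routes: dict[str, list[queue.Queue]] = field(default_factory=dict)

    def register(self, event_type: str, spoke_queue: queue.Queue) -> None:
        self.routes.setdefault(event_type, []).append(spoke_queue)

    def handle_request(self, event_type: str, payload: dict) -> dict:
        # Enqueue work for each interested spoke, then respond right away
        # instead of waiting for the spokes to finish.
        targets = self.routes.get(event_type, [])
        for spoke_queue in targets:
            spoke_queue.put({"type": event_type, "payload": payload})
        return {"status": "accepted", "dispatched": len(targets)}


def drain(spoke_queue: queue.Queue) -> list[dict]:
    """Stand-in for a spoke worker: pull all pending messages off its queue."""
    messages = []
    while not spoke_queue.empty():
        messages.append(spoke_queue.get())
    return messages


hub = Hub()
email_q, billing_q = queue.Queue(), queue.Queue()
hub.register("order.created", email_q)
hub.register("order.created", billing_q)
response = hub.handle_request("order.created", {"order_id": 42})
```

Note that neither spoke knows the other exists. Adding a third service means one more `register` call on the hub, with no changes to the existing spokes.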

Observability

When your application's functionality lives across different workloads with different codebases and roles, some commonality will have to be established for the collection of metrics and logs. If you have any kind of chained microservices, distributed tracing using projects like Zipkin or Jaeger to follow the path of a user request will be indispensable. Without a consolidated observability system, your operations engineers will have a very rough time figuring out what happened if something goes wrong, or if performance is degrading and you need to find the bottleneck.
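One small piece of that commonality is a shared log format that carries a request ID across services. The sketch below uses only the Python standard library. The field names, the `orders` service name, and the `request_id` convention are assumptions made for illustration, and this is not a substitute for a tracing system like Zipkin or Jaeger.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Hypothetical shared formatter: every service emits the same JSON fields."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "service": self.service,
            "level": record.levelname,
            "message": record.getMessage(),
            # Set by callers via `extra={"request_id": ...}`; None if absent.
            "request_id": getattr(record, "request_id", None),
        })


def new_request_id() -> str:
    """Generate an ID at the edge and pass it along with every downstream call."""
    return uuid.uuid4().hex


def make_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter(service))
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


logger = make_logger("orders")
logger.info("order received", extra={"request_id": new_request_id()})
```

If every service logs the same `request_id` for a given user request, a log aggregator can group the lines from all workloads into a single timeline.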

Network Security

If sensitive data is being passed around between services in a microservice architecture, you have a new vulnerability that doesn't generally exist for monoliths. If an intruder gains access to the network being used internally by your application, your company's and/or customers' data may be vulnerable. As such, consider the following:

- Private Network: Ensure the service calls between components occur on a private network that is well protected. This is table stakes. Unless absolutely necessary (say to integrate with a 3rd party provider), keep the network calls private from potential attackers.

- Encryption in Transit: Keeping intruders out is important, but if an intruder does gain access to your private network, don't make it easy for them. If your services communicate via HTTP, using HTTPS internally means attackers need to decrypt the content of those calls in order to gain access to the data.

- Establish Workload Identity: Use a secure method such as mutual TLS to establish workload identity, so that each request can be verified as coming from a trusted entity.
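As a rough illustration of the last two points, here is how a Python service might build a server-side TLS context that both encrypts traffic and requires callers to present a certificate. This is a sketch using the standard library `ssl` module. The function names and the certificate file paths are hypothetical, and how you issue and rotate certificates is outside its scope.

```python
import ssl


def require_client_certs(ctx: ssl.SSLContext) -> ssl.SSLContext:
    """Make a server-side context reject peers that present no valid certificate."""
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # the "mutual" part of mutual TLS
    return ctx


def build_server_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Assumes PEM files on disk; the paths are supplied by the caller."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # This service's own identity, presented to callers.
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    # The CA that signs the certificates of trusted peer workloads.
    ctx.load_verify_locations(cafile=ca_file)
    return require_client_certs(ctx)
```

With `CERT_REQUIRED` set on the server side, the TLS handshake fails for any caller whose certificate was not signed by the trusted CA.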

Testing & CI

With microservices, end-to-end testing becomes indispensable. Unit testing for each component is important but will not necessarily ensure that an operation involving multiple components will work as expected. The API contracts between services will cause failures if broken, so they must be tested comprehensively.

Take this investment into consideration when employing a microservices strategy.
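A contract check can start out very small. The Python sketch below compares a service response against the fields a consumer relies on. The `ORDER_CONTRACT` shape and the `contract_violations` helper are hypothetical, and a real project would more likely lean on a schema or contract testing tool than on hand-rolled checks like this.

```python
# Hypothetical contract: the fields and types a consumer relies on.
ORDER_CONTRACT = {"order_id": int, "status": str, "total_cents": int}


def contract_violations(response: dict, contract: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means the contract holds."""
    problems = []
    for field_name, expected_type in contract.items():
        if field_name not in response:
            problems.append(f"missing field: {field_name}")
        elif not isinstance(response[field_name], expected_type):
            problems.append(
                f"wrong type for {field_name}: expected {expected_type.__name__}"
            )
    return problems
```

Extra fields in the response are deliberately ignored, so the providing service can add data without breaking consumers. Removing or retyping a field is what trips the check.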

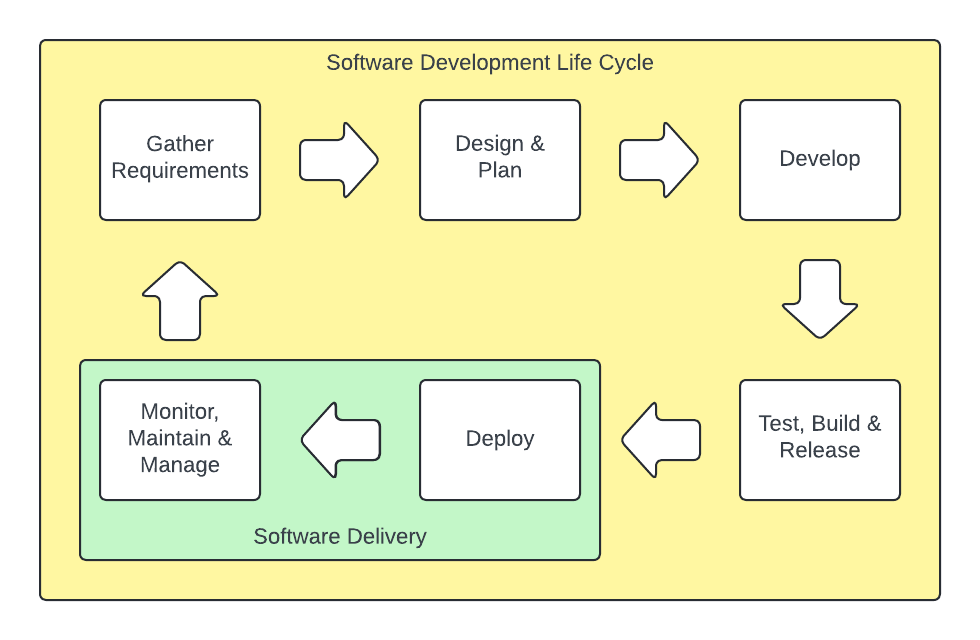

Delivery & Dependencies

Software delivery is challenging. The more distinct services you have, the greater the challenge. Be mindful of what systems you implement for this purpose. Any inefficiencies, complexities, manual toil or brittleness in the system will be multiplied by the number of services under management. If you don't establish common conventions and standardized methods for delivery, this will compound the challenge even further.

Dependency management in delivery can be particularly demanding. Services depend on one another, on components that support the system as a whole (think network, observability, secrets), and on external managed services and 3rd party providers.

This is another area of engineering investment that must be taken into careful consideration.
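To get a feel for the dependency problem, consider working out a safe deployment order. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (3.9+). The service names and the dependency map are invented for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key depends on the names in its set.
dependencies = {
    "network": set(),
    "secrets": {"network"},
    "observability": {"network"},
    "database": {"network", "secrets"},
    "api": {"database", "secrets", "observability"},
    "worker": {"api"},
}


def deployment_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every component comes after its dependencies.

    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


order = deployment_order(dependencies)
```

Even in this toy example, six components produce a graph that is awkward to reason about by hand, and a circular dependency between two services would make any ordering impossible.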

Example: Kubernetes

Kubernetes is a great example of an implementation of a distributed software system. It has a single database that stores state, and that state is accessed through a single API. Each component in the system is a controller that watches for changes that it needs to reconcile. No controller calls another. They all coordinate through the API. The Kubernetes data store, etcd, enables the watch mechanism that the controllers use. While etcd itself may not be suitable for your application, the principle is important and can be translated to your use case. Kubernetes components also export Prometheus metrics, which gives the system a common observability interface.

Kubernetes has a specific purpose as a container orchestrator so some of the specifics won't apply to your use case. However, the architecture is very sound and well worth learning from as you design your distributed systems. Check out the architecture docs for that project to learn more.
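The coordination pattern described above can be sketched in a few lines of Python. This is a heavily simplified toy, not how Kubernetes is implemented: the `StateAPI` class, both controllers, and the object shapes are hypothetical, and synchronous callbacks stand in for the real watch mechanism.

```python
from collections import defaultdict
from typing import Callable, Optional


class StateAPI:
    """Hypothetical stand-in for a single API in front of a single data store."""

    def __init__(self) -> None:
        self.objects: dict[tuple[str, str], dict] = {}
        self.watchers: dict[str, list[Callable[[str, dict], None]]] = defaultdict(list)

    def watch(self, kind: str, callback: Callable[[str, dict], None]) -> None:
        self.watchers[kind].append(callback)

    def get(self, kind: str, name: str) -> Optional[dict]:
        return self.objects.get((kind, name))

    def put(self, kind: str, name: str, obj: dict) -> None:
        # Store the new state, then notify every watcher of this kind.
        self.objects[(kind, name)] = obj
        for callback in list(self.watchers[kind]):
            callback(name, obj)


def deployment_controller(api: StateAPI) -> None:
    def reconcile(name: str, deployment: dict) -> None:
        # Desired state: one pod object per requested replica.
        for i in range(deployment["replicas"]):
            pod_name = f"{name}-{i}"
            if api.get("pod", pod_name) is None:
                api.put("pod", pod_name, {"owner": name, "node": None})

    api.watch("deployment", reconcile)


def scheduler_controller(api: StateAPI, node: str = "node-a") -> None:
    def reconcile(name: str, pod: dict) -> None:
        # Only act on pods that have not been assigned a node yet.
        if pod["node"] is None:
            api.put("pod", name, {**pod, "node": node})

    api.watch("pod", reconcile)


api = StateAPI()
deployment_controller(api)
scheduler_controller(api)
api.put("deployment", "web", {"replicas": 2})
```

The two controllers never reference each other. The deployment controller writes pod objects, the scheduler reacts to pod changes, and the only thing they share is the API.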

Threeport

Threeport is an application orchestrator that is well suited to managing the complex workload delivery of microservices. Check it out and consider its capabilities for helping with the delivery of your systems. Even if your application is a monolith, Threeport can elegantly manage all the dependencies it needs to run. And if you ever consider decomposing your monolith into different services, your software delivery will be capable of evolving with your app.

Qleet

Qleet is a Threeport managed service provider. Threeport itself is a complex distributed application and offloading the management and upkeep for it could make a lot of sense for your organization.