What is DevOps?

By Richard Lander, CTO | April 19, 2024

The term "DevOps" is a mashup of "development" and "operations." You can think of it broadly as the development of software operations systems. It includes the management of cloud compute infrastructure and the delivery systems used to deploy and manage software running on that cloud infra.

Before DevOps

Before DevOps, infrastructure and software delivery were often the domain of system administrators. Prior to the broad availability of cloud computing, it was feasible to use manual operations and scripting to manage the configuration of servers and installation of software. When cloud computing became available, organizations no longer needed to purchase servers and install them in data centers. More compute availability led to the deployment of more software. It became very advantageous to use automation to provision cloud infrastructure in a repeatable manner.

Advent of "The Cloud"

As cloud computing became widely used in the 2010s, DevOps grew in popularity. DevOps became the practice that delivered "Cloud Native" software. For a deeper dive into that topic, check out our blog post: What is Cloud Native? DevOps took on responsibility for managing a new set of capabilities that were available with cloud computing.

Responsibilities

Cloud Infrastructure

Infrastructure-as-Code (IaC) tools like Terraform became a core part of the DevOps toolbox. Configs that declared cloud resources and their settings enabled a new level of automation for compute environments. Different configs for different environments could be stored in Git repos and called upon to spin entire application environments up and down.
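To make the declarative idea concrete, here is a minimal Python sketch of how a tool might compare declared resources with what already exists and work out the changes needed. It is an illustration only, not how Terraform is implemented; the `Resource` type, the `plan` function and the environment contents are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A hypothetical cloud resource: a kind (e.g. "vm") plus its settings."""
    kind: str
    settings: tuple  # sorted (key, value) pairs so resources compare by value


def resource(kind, **settings):
    """Build a Resource from keyword settings."""
    return Resource(kind, tuple(sorted(settings.items())))


def plan(desired, actual):
    """Compare declared resources with what exists and list the changes needed."""
    changes = []
    for name, res in sorted(desired.items()):
        if name not in actual:
            changes.append(("create", name))
        elif actual[name] != res:
            changes.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            changes.append(("delete", name))
    return changes


# Two environments with the same shape but different sizes (made-up values).
staging = {"web": resource("vm", size="small", count=1)}
production = {
    "web": resource("vm", size="large", count=3),
    "db": resource("database", size="large"),
}

# Nothing exists yet, so the whole staging environment is created.
print(plan(staging, actual={}))
# Moving a running staging environment to the production declaration.
print(plan(production, actual=staging))
```

Because the declaration is just data, the same `plan` logic works for every environment, which is what makes storing one config per environment in a repo practical.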

Remote Server Configuration

Ansible, Chef and Puppet were widely used by DevOps teams to configure the servers provisioned by IaC systems and to install software on them. This was critical in automating the management of remote server systems. It was no longer feasible to do this one server at a time with manual operations. That approach was too time-consuming and error-prone.
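A key property that makes this kind of automation safe to run repeatedly is idempotency: applying the same configuration twice leaves the server in the same state as applying it once. The Python sketch below illustrates the idea with a hypothetical `ensure_line` helper that works on a config file's contents. It does not use the API of Ansible, Chef or Puppet, and the SSH settings are only example data.

```python
def ensure_line(content: str, line: str) -> tuple[str, bool]:
    """Return (new_content, changed), adding `line` only if it is missing."""
    lines = content.splitlines()
    if line in lines:
        return content, False  # already configured, nothing to do
    lines.append(line)
    return "\n".join(lines) + "\n", True


# Example file contents for one server (made-up data).
sshd_config = "PermitRootLogin no\n"

# The first run makes a change; the second run detects there is nothing to do.
sshd_config, changed_first = ensure_line(sshd_config, "PasswordAuthentication no")
sshd_config, changed_second = ensure_line(sshd_config, "PasswordAuthentication no")

print(changed_first, changed_second)
print(sshd_config, end="")
```

Reporting whether anything changed is what lets a tool run the same configuration across many servers and only touch the ones that have drifted.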

Delivery Pipelines

Continuous Delivery emerged to automate the deployment and updating of runtime environments. Jenkins was seemingly used everywhere to roll out frequent changes. As a result, more code was being deployed and updated more often than ever before.

Containers & Kubernetes

Docker popularized Linux containers, and Kubernetes won the race to orchestrate all the containers that organizations needed to run. DevOps teams now had Kubernetes resource configurations to wrangle, and more tools emerged, such as Helm and Kustomize. Then GitOps platforms like Argo CD and Flux became the continuous delivery tools of choice, with support for Helm and Kustomize.

Tools & Interoperability

The common theme in DevOps has been the construction of systems by combining different tools to produce the intended results. Expertise in these tools has become indispensable. DevOps as a practice has grown and has become a significant part of many organizations' software engineering endeavors. The sophistication of the software under management has grown as well, and so has the need for advanced application platforms that meet custom requirements.

One of the challenges with building an application platform from many different tools is interoperability between those tools. There are often overlapping concerns. For example, if you define cloud computing infrastructure with Terraform, you need to take outputs from Terraform and plumb them into the Kubernetes resource configurations that are managed by other tools like Helm. Projects like Crossplane have sprung up to unify all application platform concerns in Kubernetes. Everything is defined with custom Kubernetes resources. In principle, this has a lot of merit. In practice with Crossplane, all behavior is defined on the platform with YAML. At that point you need to ask yourself: is YAML the best solution for defining complex behavior in a system? Or are general purpose programming languages the right choice? And this is where platform engineering comes into the picture (see below).
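The Terraform-to-Helm plumbing mentioned above might look something like the following Python sketch. It assumes the JSON shape printed by `terraform output -json`, where each output is an object with `sensitive`, `type` and `value` keys. The output names, the `helm_values` function and the `database` values layout are hypothetical and would depend on your own Terraform config and Helm chart.

```python
import json

# Sample of what `terraform output -json` prints. The output names are made up.
TERRAFORM_OUTPUT = """
{
  "db_endpoint": {"sensitive": false, "type": "string", "value": "db.example.internal"},
  "db_port": {"sensitive": false, "type": "number", "value": 5432},
  "db_password": {"sensitive": true, "type": "string", "value": "s3cr3t"}
}
"""


def helm_values(terraform_json: str) -> dict:
    """Map non-sensitive Terraform outputs into a values dict for a chart."""
    outputs = json.loads(terraform_json)
    database = {}
    for name, out in outputs.items():
        if out["sensitive"]:
            continue  # keep secrets out of plain values files
        database[name.removeprefix("db_")] = out["value"]
    return {"database": database}


values = helm_values(TERRAFORM_OUTPUT)
print(json.dumps(values, indent=2))
```

Glue code like this tends to accumulate between every pair of tools in the platform, which is part of the interoperability cost described above.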

The Ops in DevOps

Since DevOps engineers construct the delivery mechanisms, they are usually the team responsible for operational stability as well. Any incidents at the platform level, at the very least, are on the DevOps team to remedy. Too often, a configuration mistake rather than a core application bug leads to incidents in production. As a result, DevOps team members are usually on call for any production incidents.

DevOps & SRE

Site Reliability Engineering (SRE) is a practice born at Google that combines platform engineering and operations responsibilities. Much of it applies to DevOps, so you'll find a copy of the SRE book on most DevOps engineers' bookshelves.

DevOps & Platform Engineering

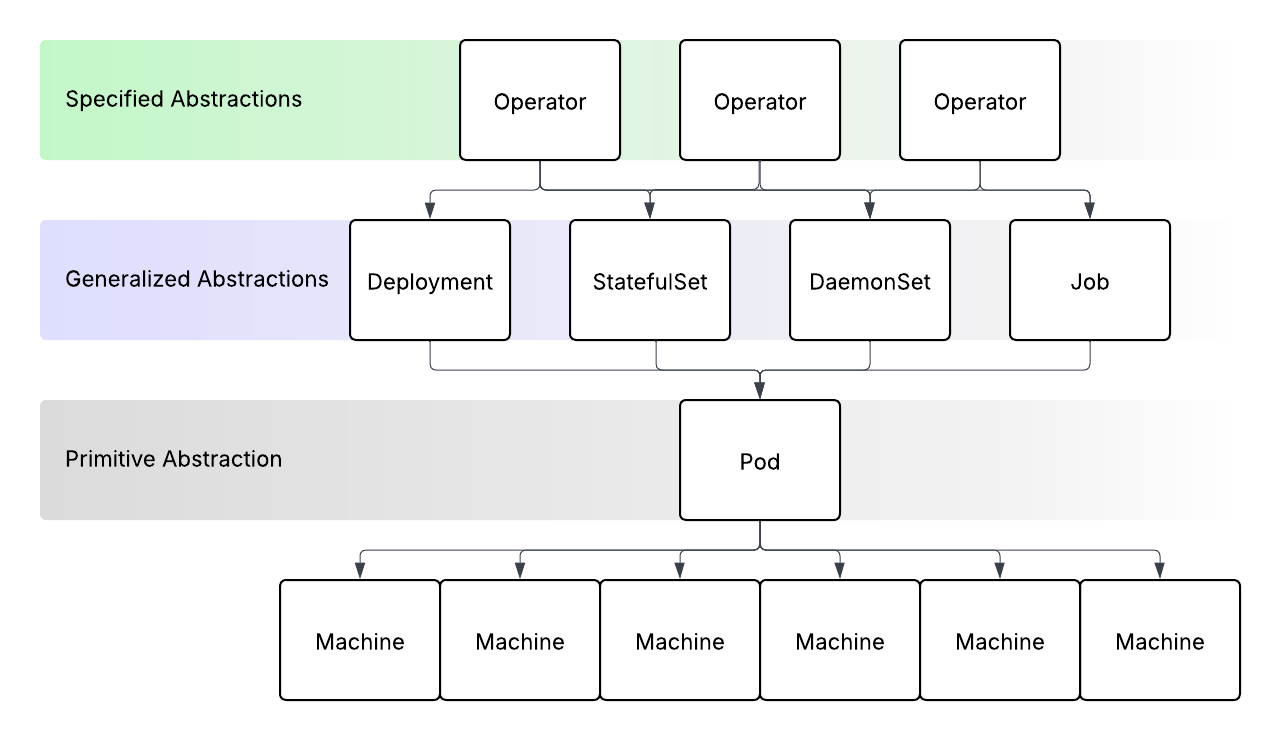

Platform engineering is the discipline of developing software to provide integrations between otherwise disconnected parts of an app platform, and to provide abstractions that make delivery of complex software systems more readily manageable. These abstractions are usually at the Kubernetes layer and involve building Kubernetes operators. Operators present abstractions in the form of custom Kubernetes resources that can be used to deploy complex apps. They take the operational knowledge of humans and encode it into software that runs in Kubernetes and extends the K8s control plane. For a deeper dive into platform engineering, see our blog post: What is Platform Engineering?
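At the heart of an operator is a control loop that repeatedly compares what a custom resource declares with what is actually running and takes a step to close the gap. The Python sketch below shows that pattern with an in-memory dict standing in for a cluster. It is a simplified illustration that does not use the Kubernetes API; real operators are typically built with an operator framework, and the `reconcile` function and the `web` app here are hypothetical.

```python
def reconcile(app: dict, cluster: dict) -> list[str]:
    """One pass of a control loop: nudge `cluster` toward what `app` declares."""
    actions = []
    name, want = app["name"], app["replicas"]
    have = cluster.get(name, 0)
    if have < want:
        cluster[name] = have + 1
        actions.append(f"start replica {have + 1} of {name}")
    elif have > want:
        cluster[name] = have - 1
        actions.append(f"stop replica {have} of {name}")
    return actions  # an empty list means desired and actual state match


# A custom resource reduced to the two fields this sketch needs.
web_app = {"name": "web", "replicas": 3}
cluster = {}  # stand-in for the running state, keyed by app name

# Keep reconciling until there is nothing left to do.
while actions := reconcile(web_app, cluster):
    print(actions)

# Changing the declaration is all it takes to scale down.
web_app["replicas"] = 1
while actions := reconcile(web_app, cluster):
    print(actions)
```

The decision logic lives in ordinary code, which is where a general purpose programming language has room to express behavior that would be awkward to capture in configuration alone.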

Threeport

Threeport is an application orchestrator that redefines software delivery. It gives DevOps a unified platform to manage the complex concerns they are responsible for. It is open source and freely available to try. Simply download the Threeport CLI from GitHub and refer to the docs for instructions.

Qleet

Qleet offers managed Threeport control planes as a service. For teams looking to take advantage of Threeport without the overhead of managing, scaling and upgrading Threeport itself, Qleet provides a great solution.